Billingsley, Patrick. 1995. Probability and Measure. Wiley.

Consonni, Guido, and Piero Veronese. 1992. “Conjugate Priors for Exponential Families Having Quadratic Variance Functions.” Journal of the American Statistical Association 87 (420): 1123–27.

Davison, A. C. 2003. Statistical Models. Cambridge University Press.

Diaconis, Persi, and Donald Ylvisaker. 1979. “Conjugate priors for exponential families.” The Annals of Statistics 7 (2): 269–92.

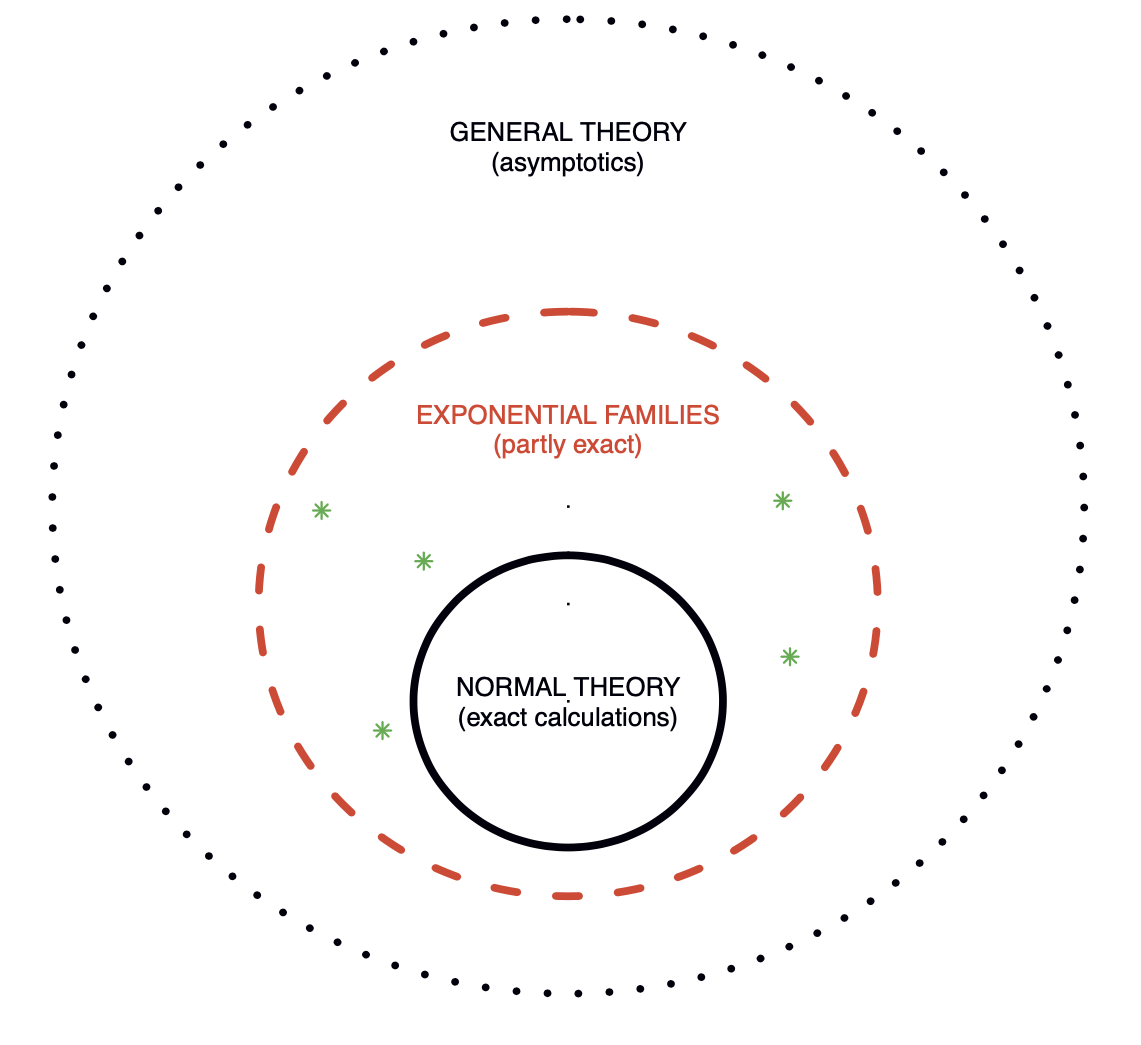

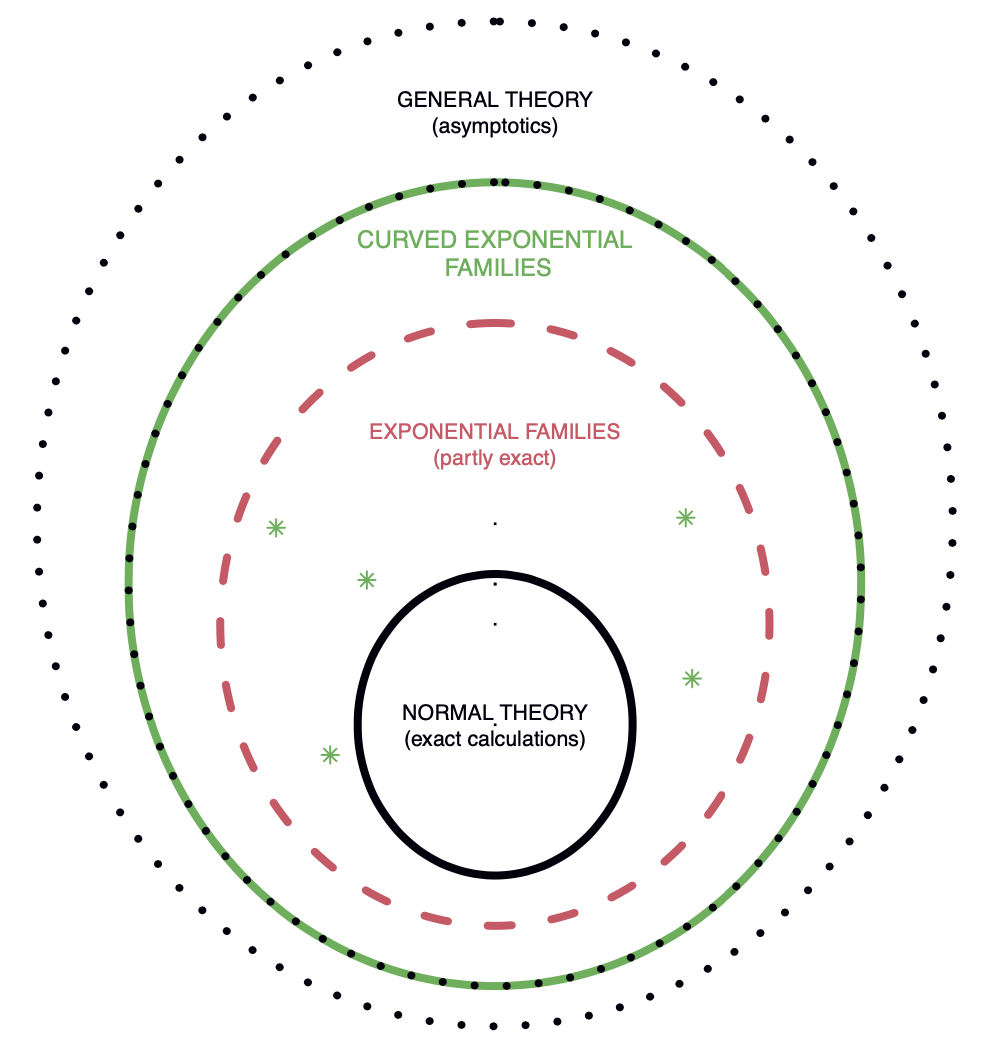

Efron, Bradley. 2023. Exponential Families in Theory and Practice. Cambridge University Press.

Efron, Bradley, and Trevor Hastie. 2016. Computer Age Statistical Inference. Cambridge University Press.

Fisher, R. A. 1934. “Two new properties of mathematical likelihood.” Proceedings of the Royal Society of London. Series A 144 (852): 285–307.

Jorgensen, Bert. 1987. “Exponential dispersion models.” Journal of the Royal Statistical Society. Series B: Methodological 49 (2): 127–62.

Lehmann, E. L., and G. Casella. 1998. Theory of Point Estimation, Second Edition. Springer.

Morris, Carl N. 1982. “Natural Exponential Families with Quadratic Variance Functions.” The Annals of Statistics 10 (1): 65–80.

Nelder, J. A., and R. W. M. Wedderburn. 1972. “Generalized linear models.” Journal of the Royal Statistical Society. Series A: Statistics in Society 135 (3): 370–84.

Pace, Luigi, and Alessandra Salvan. 1997. Principles of statistical inference from a Neo-Fisherian perspective. Vol. 4. Advanced Series on Statistical Science and Applied Probability. World Scientific.

Robert, Christian P. 1994. The Bayesian Choice: from decision-theoretic foundations to computational implementation. Springer.